Since their debut, we’ve had a glimpse of the initial performance of the GeForce RTX 2070, RTX 2080, and RTX 2080 Ti, along with an in-depth review of the last of these. Now, with a mid-range RTX 2060 graphics card likely on the horizon, we thought it best to break down NVIDIA’s newest graphics architecture to help you better understand how much more powerful it is than its predecessor, and what it means for the future of PC gaming.

What is Turing?

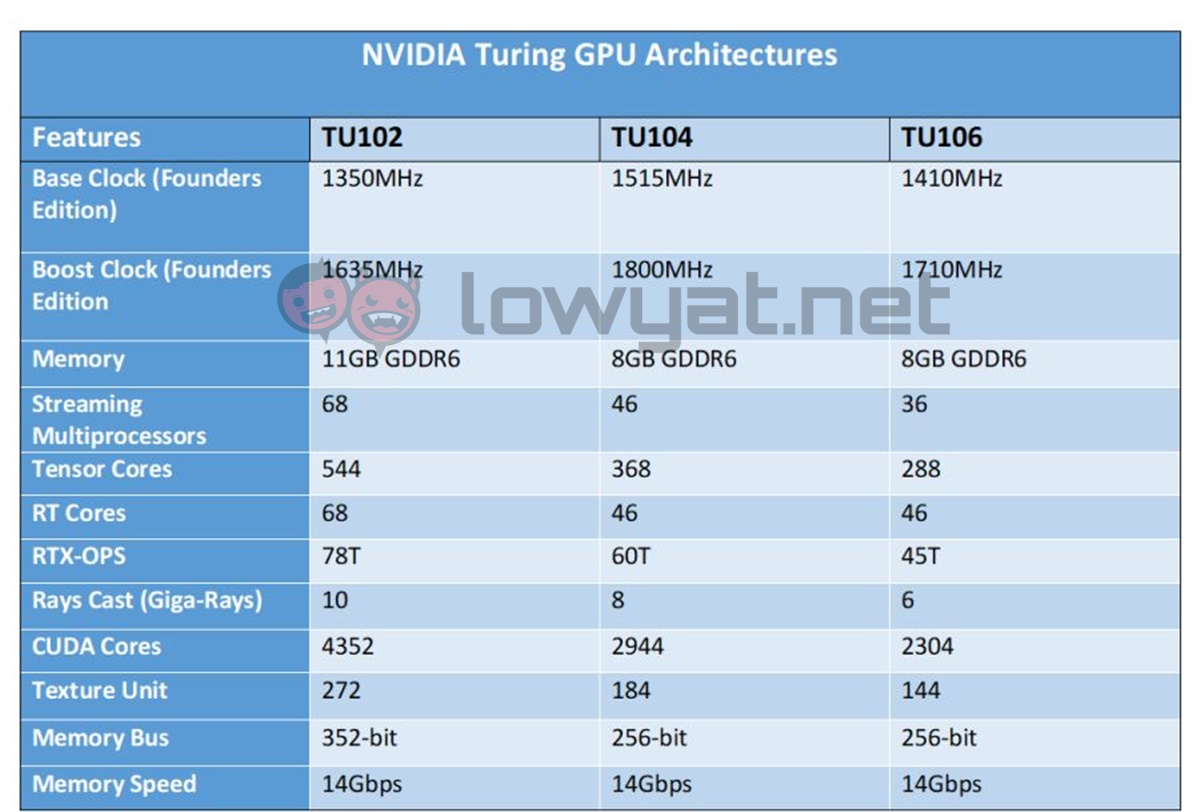

Named after Alan Turing, the British mathematician responsible for deciphering the Enigma code, NVIDIA’s Turing GPU is built on a new 12nm FinFET manufacturing process. It’s also designed to work in tandem with the latest GDDR6 memory technology on GeForce RTX 20 series graphics cards. For the GeForce graphics cards specifically, the Turing GPU architecture comes in three variants: the TU102, TU104, and TU106. As per NVIDIA’s naming conventions, the TU102 GPU is the cream of the crop. The TU104 and TU106 GPUs are cut from the same cloth, albeit scaled down by varying degrees. To simplify things, we’ve created a specifications chart to help you understand the differences between the three GPUs:

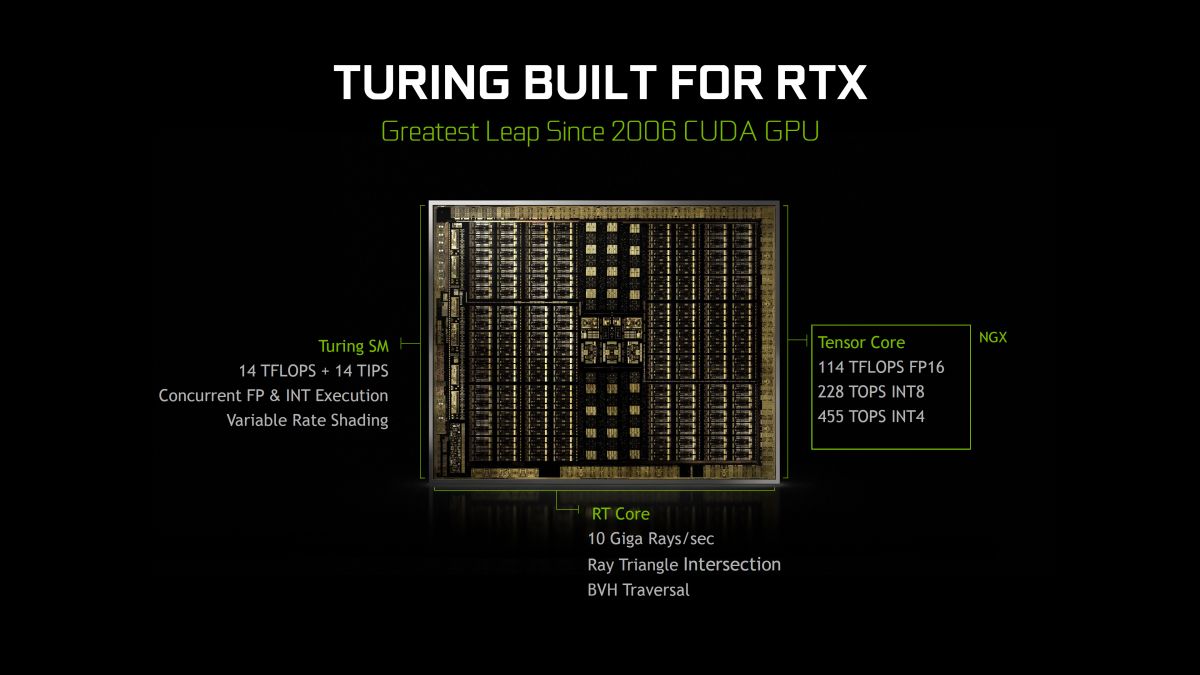

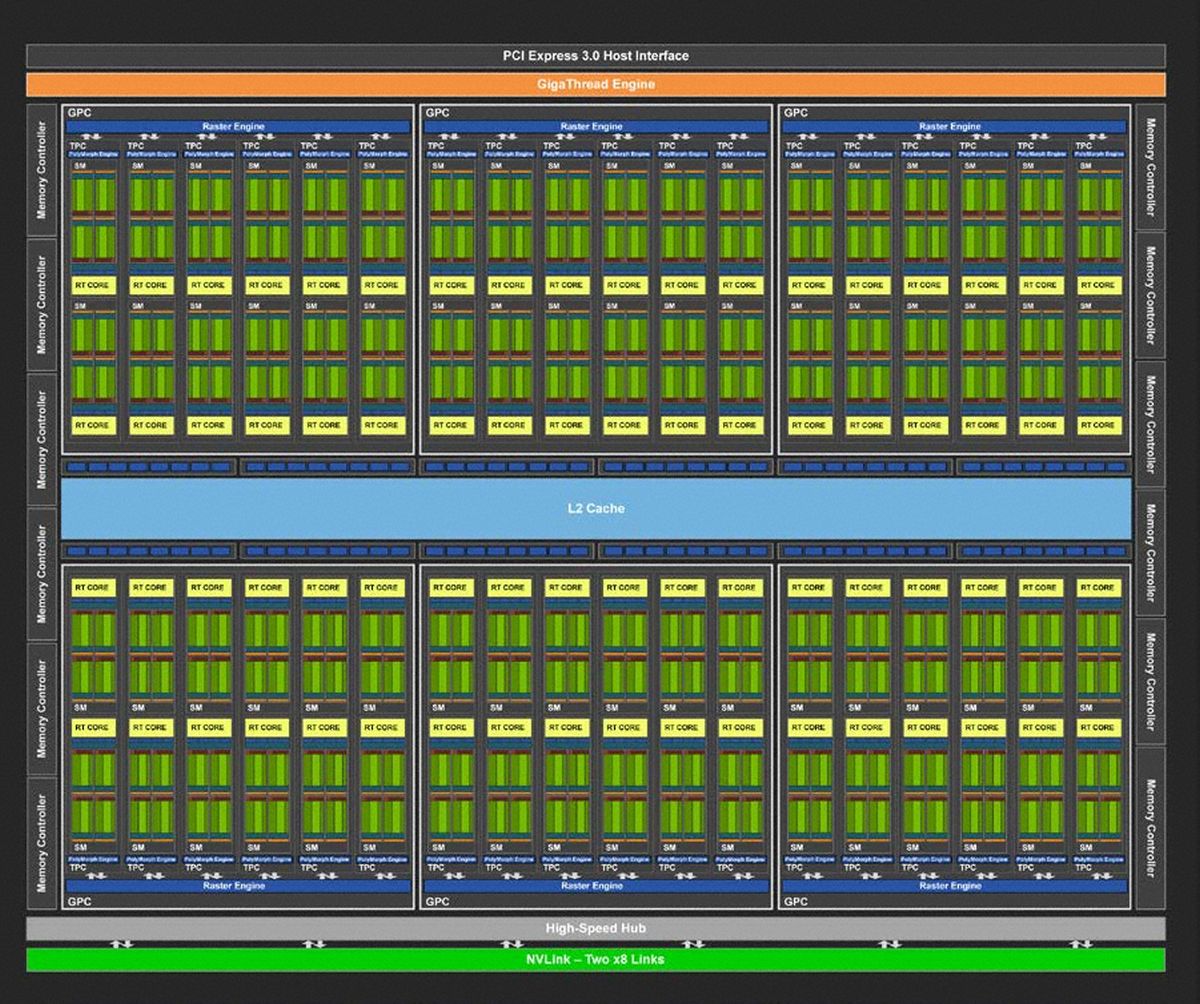

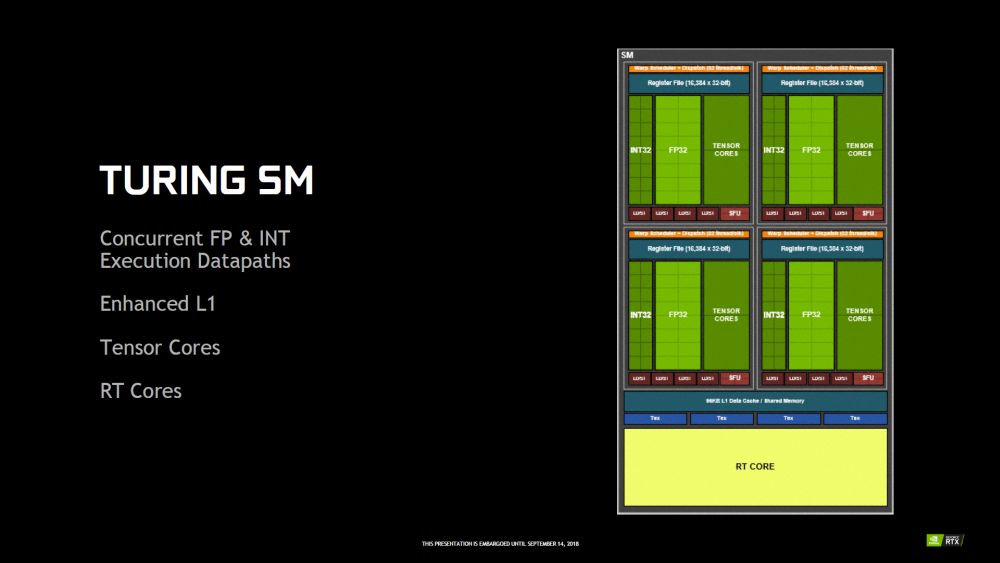

Compared to Pascal, Turing also comes loaded with a whole basket of new additions. This includes a new streaming multiprocessor (SM) design, aptly known as the Turing SM. According to NVIDIA, the new Turing SM delivers 50% more performance per CUDA Core compared to the previous generation Pascal SM. This is partly due to the fact that the new SM unifies shared memory, texture caching, and memory load caching into a single unit, effectively doubling both the bandwidth and the capacity of the L1 cache. Aside from the Turing SM, NVIDIA’s new Turing GPU architecture also houses a couple of new core units designed to work alongside the existing CUDA Cores. These are the newly designed Tensor Cores (TC) and ray-tracing (RT) Cores (more on those later).

More importantly, Turing (and, by extension, the Quadro and GeForce RTX series graphics cards built on it) is also the first GPU architecture to use the GDDR6 memory format. Compared to the GDDR5X memory on NVIDIA’s previous cards, the new GDDR6 memory is capable of transfer rates of up to 14Gbps while consuming 20% less power. With all that power, you’re probably now wondering what sort of benefits PC gamers would gain from the new Turing GPU, and how its new features and core units would further improve graphical fidelity. That leads us to one of the GPU’s primary selling points, and the next topic of discussion.
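Before moving on, a quick back-of-the-envelope on that 14Gbps figure. The number is a per-pin data rate, so the total memory bandwidth of a card also depends on how wide its memory bus is. The short Python sketch below shows the arithmetic. The function name is ours, and the bus widths used in the example (352-bit and 256-bit) are assumptions taken from public spec sheets rather than from the text above.

```python
def memory_bandwidth_gb_per_s(data_rate_gbps, bus_width_bits):
    """Peak memory bandwidth in GB/s from per-pin data rate and bus width."""
    # Each pin moves data_rate_gbps gigabits per second; divide by 8 for bytes.
    return data_rate_gbps * bus_width_bits / 8


# Bus widths below are assumptions (public spec sheets), not figures from this article.
print(memory_bandwidth_gb_per_s(14, 352))  # 352-bit bus, e.g. RTX 2080 Ti
print(memory_bandwidth_gb_per_s(14, 256))  # 256-bit bus, e.g. RTX 2080
```

Under those assumptions, the two cards work out to a theoretical peak of 616GB/s and 448GB/s respectively.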

Real-time ray-tracing

To be clear, ray-tracing technology has been around for a long time. Embraced by game developers and special effects groups alike, the light-altering technique has been the tool of choice for companies seeking to add that extra depth of realism to their work, rather than simply applying the less demanding method of rasterisation. In NVIDIA’s case, the company decided to take ray-tracing to the next step by making it react to an environment in real time, instead of just using the technology to create near photo-realistic renders of static objects. Naturally, pulling off such a feat requires an immense amount of computational and graphical power. This is where the Turing GPU and its embedded RT Cores come into the picture. Depending on the Turing GPU in question, NVIDIA’s new GeForce RTX 20 series graphics cards come with a maximum of 68 RT Cores and a minimum of 36 RT Cores (for the yet to be released RTX 2070). With the new RT Cores, Turing-equipped graphics cards such as the GeForce RTX series are able to perform in-game ray-tracing computations at up to 10 Giga Rays per second. And yes, Giga Rays is a new unit of measurement created by NVIDIA to describe its new RTX technology.
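To put 10 Giga Rays per second into perspective, here is a rough Python sketch that converts the figure into an average ray budget per pixel. The resolution and frame rate (4K at 60 frames per second) are our own assumptions for illustration, and the result treats NVIDIA’s number as a theoretical peak rather than what a real game would sustain.

```python
def rays_per_pixel(giga_rays_per_second, width, height, fps):
    """Average number of rays available per pixel per frame at a given ray rate."""
    rays_per_second = giga_rays_per_second * 1e9
    pixels_per_second = width * height * fps
    return rays_per_second / pixels_per_second


# Assumed target: 3840 x 2160 at 60 frames per second.
budget_4k60 = rays_per_pixel(10, 3840, 2160, 60)
print(f"{budget_4k60:.1f} rays per pixel per frame")
```

That comes to roughly 20 rays per pixel per frame, which has to cover reflections, shadows, and any other ray-traced effect in the scene.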

To be fair, most games already showcase an incredible amount of realism with the current level of in-game lighting and physics. With the addition of RT Cores, that realism is essentially cranked into overdrive, making in-game light sources behave more realistically, just as light does in the real world. The end result is that surfaces, textures, and environments become more reactive to light sources. Shadows look more realistic with an umbra and a penumbra: a deep, dark, hard shadow, offset by a softer, less pronounced shadow around its edges. All of this is determined by the strength and direction of the light source, of course.
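The umbra and penumbra fall out naturally once shadows are computed by casting rays towards a light that has some size. The toy Python sketch below illustrates the idea in two dimensions: a point on the ground fires shadow rays at many sample points across a light, and the fraction that gets past an occluder decides how lit that point is. The scene layout and the function name are entirely hypothetical, and this is a CPU illustration of the principle, not how RT Cores or NVIDIA’s RTX software actually work.

```python
def light_visibility(px, samples=64):
    """Fraction of an area light visible from the ground point (px, 0)."""
    light_y, light_x0, light_x1 = 4.0, -1.0, 1.0  # light: segment at height 4
    occ_y, occ_x0, occ_x1 = 2.0, -1.0, 1.0        # occluder: segment at height 2
    visible = 0
    for i in range(samples):
        # Sample the light at the centre of the i-th equal slice of its width.
        lx = light_x0 + (i + 0.5) / samples * (light_x1 - light_x0)
        # Find where the shadow ray from the ground crosses the occluder's height.
        t = occ_y / light_y
        hit_x = px + t * (lx - px)
        # The ray reaches the light only if it misses the occluder.
        if not (occ_x0 <= hit_x <= occ_x1):
            visible += 1
    return visible / samples


for px in (0.0, 2.0, 4.0):
    print(px, light_visibility(px))
```

In this layout, the point directly under the occluder sees none of the light (umbra), the point at 2.0 sees part of it (penumbra), and the point at 4.0 is fully lit.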

Better efficiency with NGX

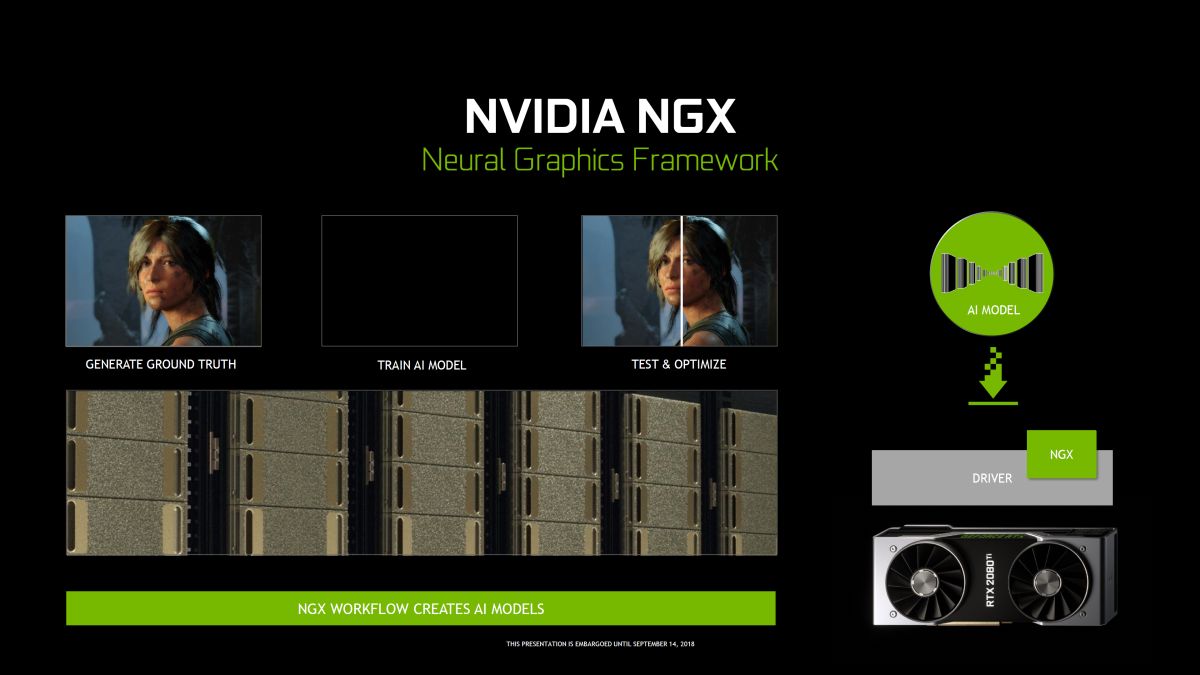

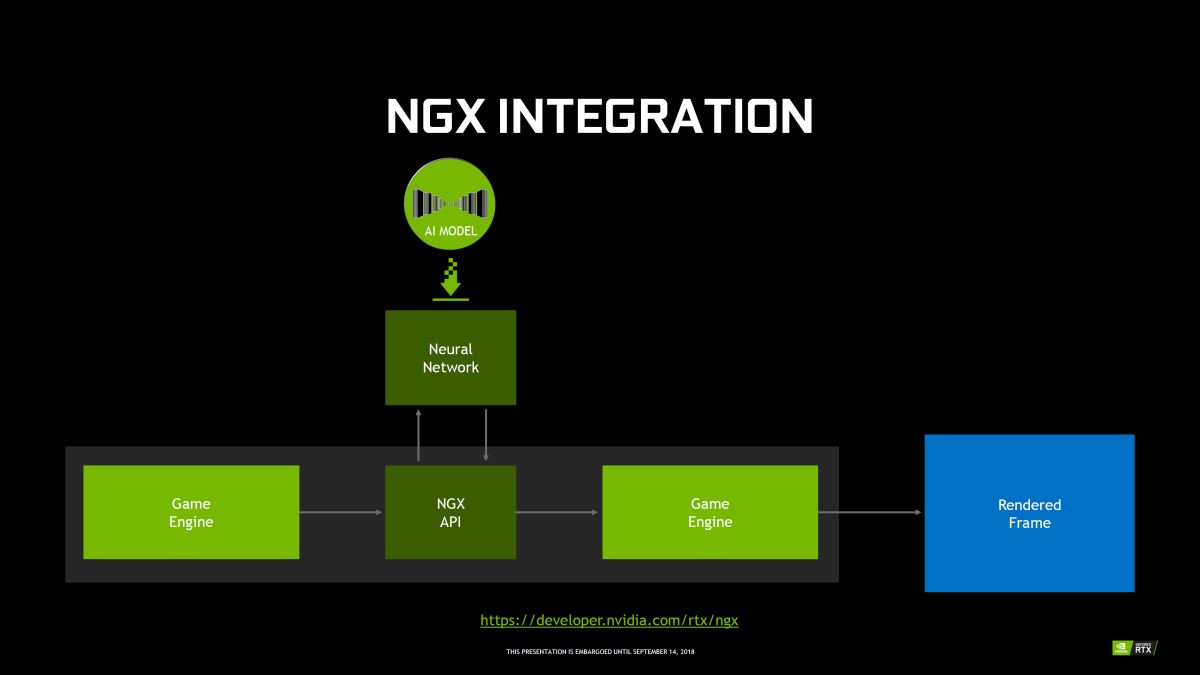

One feature that is pretty much exclusive to the Turing GPU is NVIDIA Neural Graphics Acceleration, or simply NGX. To be fair, NGX isn’t new. It’s a feature that has been used by NVIDIA in its Deep Neural Networks (DNN) for deep learning operations (along with its Tensor Cores). There are multiple applications of NGX, but in this case, we’ll only be focusing briefly on a select few that are most relevant to Turing.

The first feature that benefits from NVIDIA’s NGX, and perhaps one of the more notable, is a new form of anti-aliasing known as Deep Learning Super Sampling, or DLSS. The process is a rather interesting one. Unlike both traditional and current methods of supersampling (or anti-aliasing), DLSS requires video game developers to provide NVIDIA with their game and its accompanying code. Upon receiving a game title, NVIDIA then puts it through its proprietary AI servers. The idea is that this “trains” the company’s servers to recognise a game’s environmental coding and graphical settings. Once that training is complete, any and all information pertaining to that game is stored within NVIDIA’s database, ready to be deployed as a driver update (either as a Day-1 update or a simple patch).

The end result of this procedure is that DLSS is able to render a highly detailed image of much higher quality than a similar image produced with Temporal Anti-Aliasing (TAA). On top of that, DLSS renders that image at a lower input sample count and at a more efficient rate. As an example, the RTX 2080 Ti is capable of rendering 4K DLSS images at twice the performance of a Pascal-powered GTX 1080 Ti rendering a 4K TAA image.

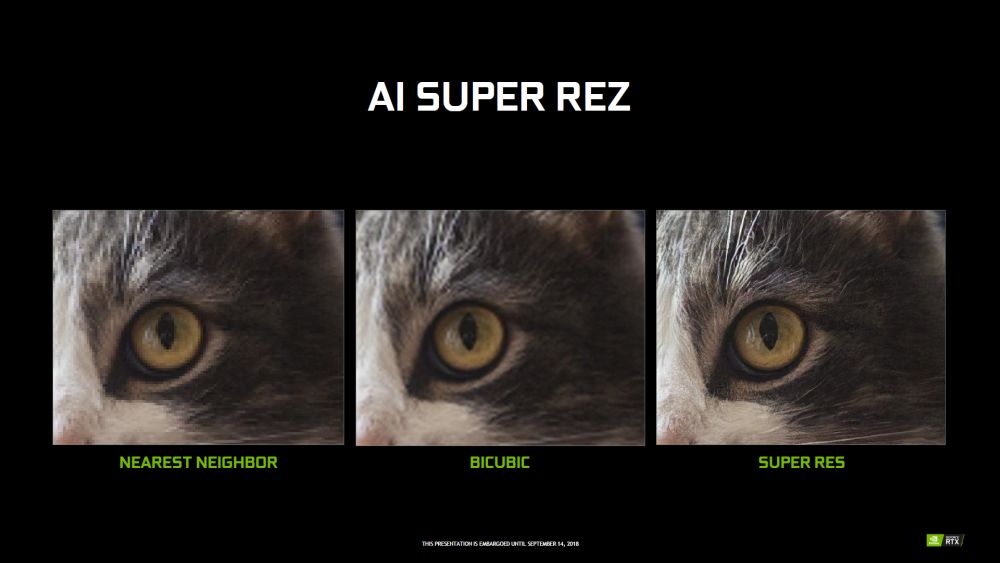

Besides DLSS, NGX also gives the Turing GPU architecture the benefit of utilising NVIDIA’s AI Super Rez technology. Unlike traditional filtering methods that stretch out existing pixels and filter between them, AI Super Rez utilises the Tensor Cores of the Turing GPU to create new pixels through intelligent interpretation of the image in question, and then places those pixels in the appropriate areas. The result is an image that correctly preserves the depth of field (among other aspects) that is usually lost in image enlargement. It’s important to note that NGX doesn’t function on any GPU architecture prior to Turing, so those of you still using a graphics card running on the Pascal GPU architecture or older are out of luck. On the upside, NVIDIA has assured consumers that NGX-trained titles will easily interface with the latest DirectX and Vulkan APIs.
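For reference, the “traditional filtering” that AI Super Rez is contrasted with can be sketched in a few lines. The Python example below is a minimal bilinear upscaler for a small greyscale image stored as a list of rows. The function name and the sample image are our own for illustration. It does not attempt to reproduce AI Super Rez itself, whose network is not described in this article.

```python
def bilinear_upscale(image, out_h, out_w):
    """Enlarge a 2D greyscale image by blending the four nearest source pixels."""
    in_h, in_w = len(image), len(image[0])
    out = []
    for y in range(out_h):
        # Map the output row back to a fractional row in the source image.
        sy = y * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, in_h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            sx = x * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, in_w - 1)
            fx = sx - x0
            # Blend horizontally on the two rows, then blend vertically.
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


small = [[0.0, 10.0],
         [20.0, 30.0]]
for row in bilinear_upscale(small, 3, 3):
    print(row)
```

Every output pixel here is just a weighted average of its neighbours, so no new detail is ever created. That is the limitation a learned upscaler is meant to address.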

NVLink comes to the masses

Turing’s arrival also brings another piece of technology to the masses: the second generation of NVIDIA’s NVLink interface technology. NVLink was initially available only with the company’s deep learning and AI-driven Pascal and Volta GPUs, and NVIDIA is clearly optimistic about it becoming its new bridge for multi-GPU configurations. Before you get all excited, we should point out that the Turing-powered GeForce RTX series cards still only support SLI over the NVLink bus. This means that any multi-GPU configuration will retain the same “Master and Slave” arrangement, rather than a mesh-like configuration in which no one card is a master or slave.

In terms of bandwidth, the new NVLink bridge is capable of reaching up to 100GB/s of bidirectional bandwidth between cards. That’s a whopping 50 times more than the High Bandwidth (HB) SLI bridge’s maximum transfer rate of 2GB/s. It should also be pointed out that NVLink is only available on RTX series cards powered by the TU102 and TU104 GPUs, meaning that, at this point, only the GeForce RTX 2080 Ti and RTX 2080 graphics cards are capable of making use of the feature.

Are there any RTX-ready games?

At this time, the only game title that is RTX-ready is Battlefield V. However, during Gamescom 2018, NVIDIA did show off a list of other games that would benefit from its new Turing GPU and real-time ray-tracing technology. Naturally, that list is still growing, and we advise that you check it out yourself on NVIDIA’s official page. Of course, if you followed the company’s Gamescom keynote closely, you’ll know that the list includes several triple-A titles such as Battlefield V, Metro Exodus, and Shadow of the Tomb Raider.

As mentioned earlier, we’ve already conducted some initial tests with all three cards, plus an in-depth review of NVIDIA’s gaming-grade king of the hill, the RTX 2080 Ti. We’ll definitely be publishing an in-depth review of the RTX 2070 soon as well, along with another story comparing all the cards with their RTX features switched on and off. Until then, stay tuned and do check back with us later.